Vector embeddings are central to generative AI applications including NLP, content generation, and intelligent search. With Onehouse, vector embeddings can now be generated quickly, easily, and cost-effectively on the data lakehouse.

SUNNYVALE, CA / ACCESSWIRE / August 22, 2024 / Onehouse, the Universal Data Lakehouse company, today announced that companies that want to reduce the time and costs required to build vector embeddings for generative AI applications now have an easier, more efficient, and more scalable solution.

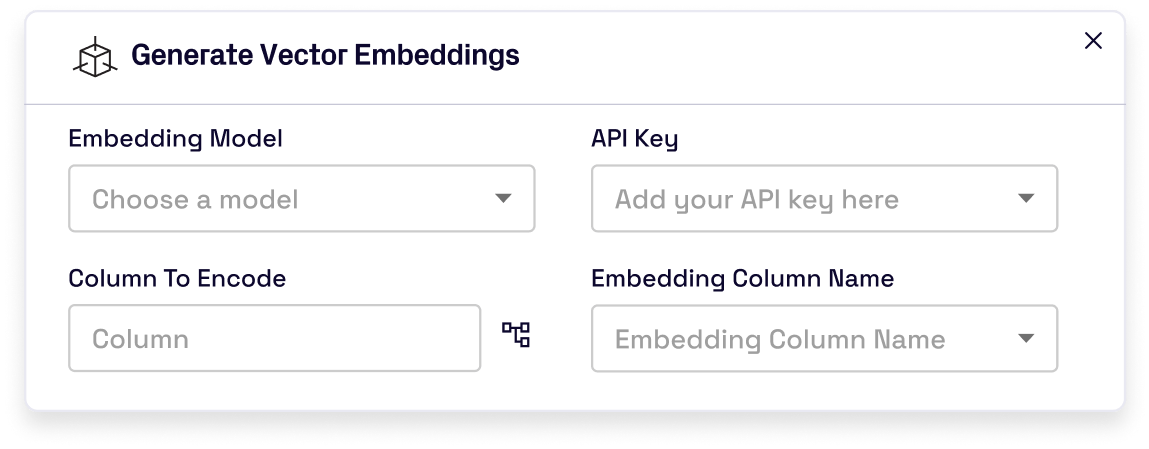

Onehouse is launching a vector embeddings generator to automate embeddings pipelines as a part of its managed ELT cloud service. These pipelines continuously deliver data from streams, databases, and files on cloud storage to foundation models from OpenAI, Voyage AI, and others. The models then return the embeddings to Onehouse, which stores them in highly optimized tables on the user's data lakehouse.
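As a rough illustration of the pipeline shape described above, the following minimal Python sketch embeds incoming records and upserts the results into a table keyed by record ID, skipping records whose content has not changed. It is not Onehouse's API: `fake_embed`, `EmbeddingTable`, and `upsert_batch` are hypothetical names, the embedding function is a deterministic stand-in for a call to a foundation model, and the in-memory dictionary stands in for a lakehouse table.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

Vector = List[float]


def fake_embed(text: str, dim: int = 8) -> Vector:
    """Hypothetical stand-in for a foundation-model embedding call."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


@dataclass
class EmbeddingRow:
    content_hash: str  # fingerprint of the text that produced the vector
    vector: Vector


class EmbeddingTable:
    """In-memory stand-in for a lakehouse table keyed by record ID."""

    def __init__(self, embed: Callable[[str], Vector]):
        self.embed = embed
        self.rows: Dict[str, EmbeddingRow] = {}
        self.model_calls = 0  # counts how often the (costly) model is invoked

    def upsert_batch(self, records: Iterable[Tuple[str, str]]) -> int:
        """Embed only new or changed records; return how many were embedded."""
        embedded = 0
        for record_id, text in records:
            content_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
            existing = self.rows.get(record_id)
            if existing is not None and existing.content_hash == content_hash:
                continue  # unchanged record: reuse the stored vector
            self.rows[record_id] = EmbeddingRow(content_hash, self.embed(text))
            self.model_calls += 1
            embedded += 1
        return embedded


# Two batches arrive; only record "b" changes in the second one.
table = EmbeddingTable(fake_embed)
first = table.upsert_batch([("a", "red shoes"), ("b", "blue hat")])
second = table.upsert_batch([("a", "red shoes"), ("b", "blue cap")])
print(first, second, table.model_calls)
```

Comparing a content hash before calling the model is one simple way to avoid regenerating vectors for unchanged data; a production pipeline would rely on the table format's own change tracking instead.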

As AI initiatives accelerate, organizations increasingly struggle to manage data across numerous siloed vector databases that power retrieval-augmented generation (RAG) applications, which leads to excessive costs and wasteful regeneration of vectors. The data lakehouse, with open data formats on top of scalable, inexpensive cloud storage, is becoming the natural platform for centralizing and managing the vast amounts of data used by AI models. Users can now choose carefully which data and embeddings to move to downstream vector databases.

"Text search has evolved dramatically. The traditional tools have complications on their own, as in, ingress of data and egress when we would want to move out. Vector embeddings on data lakehouse not only avoids the ingress and egress complexities and cost but also can scale to massive volumes," said Kaushik Muniandi, engineering manager at NielsenIQ. "We found that vector embeddings on data lakehouse is the only solution that scales to support our application's data volumes while minimizing costs and delivering responses in seconds."

With the vector embeddings generator now part of Onehouse's powerful incremental ELT platform, Onehouse customers can streamline their vector embeddings pipelines to store embeddings directly on the lakehouse. This provides all of the lakehouse's capabilities for update management, late-arriving data, concurrency control, and more, while scaling to the data volumes needed to power large-scale AI applications. Organizations now have a simpler way to augment the data that AI models operate on - including audio, text, and images - with vectors that capture the relationships among data items to support AI use cases.

The Onehouse product integrates with vector databases to enable high-scale, low-latency serving of vectors for real-time use cases. The data lakehouse stores all of an organization's vector embeddings and serves vectors in batch, while hot vectors are moved dynamically to the vector database for real-time serving. This architecture provides scale, cost, and performance advantages for building AI applications such as those based on large language models (LLMs) and intelligent search.
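The tiering idea in this architecture can be sketched in a few lines of Python. The example below is only an assumption-laden illustration, not the product's implementation: `TieredVectorStore`, `rebalance`, and the "most frequently read" promotion policy are hypothetical, and two dictionaries stand in for the lakehouse table and the vector database.

```python
from collections import Counter
from typing import Dict, List, Optional

Vector = List[float]


class TieredVectorStore:
    """Hypothetical two-tier store: full set in the lakehouse, hot subset in a vector DB."""

    def __init__(self, hot_capacity: int):
        self.hot_capacity = hot_capacity
        self.lakehouse: Dict[str, Vector] = {}  # stand-in for the lakehouse table (all vectors)
        self.hot: Dict[str, Vector] = {}        # stand-in for the vector database (hot subset)
        self.hits: Counter = Counter()          # read counts used by the assumed policy

    def put(self, key: str, vector: Vector) -> None:
        """Write to the lakehouse; refresh the hot copy if one exists."""
        self.lakehouse[key] = vector
        if key in self.hot:
            self.hot[key] = vector

    def get(self, key: str) -> Optional[Vector]:
        """Serve from the hot tier when possible, otherwise from the lakehouse."""
        if key not in self.lakehouse:
            return None
        self.hits[key] += 1
        if key in self.hot:
            return self.hot[key]
        return self.lakehouse[key]

    def rebalance(self) -> None:
        """Promote the most frequently read vectors into the hot tier."""
        ranked = [k for k, _ in self.hits.most_common() if k in self.lakehouse]
        self.hot = {k: self.lakehouse[k] for k in ranked[: self.hot_capacity]}


store = TieredVectorStore(hot_capacity=1)
store.put("a", [0.1, 0.2])
store.put("b", [0.3, 0.4])
store.put("c", [0.5, 0.6])
for _ in range(3):
    store.get("a")
store.get("b")
store.rebalance()
print(sorted(store.hot))
```

Keeping the lakehouse as the system of record means the hot tier can be rebuilt at any time from stored vectors without calling the embedding model again.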

"Data processing and storage are foundational for AI projects," said Prashant Wason, Staff Software Engineer at Uber and Apache Hudi™ Project Management Committee member. "Hudi, and lakehouses more broadly, should be a key part of this journey as companies build AI applications on their large datasets. The scale, openness and extensible indexing that Hudi offers make this approach of bridging the lakehouse and operational vector databases a prime opportunity for value creation in the coming years."

The shift to the data lakehouse is now the norm. A survey of C-suite executives, chief architects, and data scientists by MIT Technology Review and Databricks found that almost three-quarters of organizations have adopted a lakehouse architecture. Of those, 99 percent said the architecture was helping to achieve their data and AI goals.

Onehouse CEO Vinoth Chandar led the creation of Apache Hudi while at Uber in 2016. Uber donated the project to the Apache Software Foundation in 2018. Onehouse works across all popular open source data lakehouse formats - Apache Hudi, Apache Iceberg, and Delta Lake - via integration with Apache XTable™ (Incubating) for interoperability.

"AI is going to be only as good as the data fed to it, so managing data for AI is going to be a key aspect of data platforms going forward," said Chandar. "Hudi's powerful incremental processing capabilities also extend to the creation and management of vector embeddings across massive volumes of data. It provides both the open source community and Onehouse customers with significant competitive advantages, such as continuously updating vectors with changing data while reducing the costs of embedding generation and vector database loading."

If you are interested in seeing vector embeddings for AI built and managed in the data lakehouse, join the upcoming webinar with NielsenIQ and Onehouse, "Vector Embeddings in the Lakehouse: Bridging AI and Data Lake Technologies."

About Onehouse

Onehouse, the pioneer in open data lakehouse technology, empowers enterprises to deploy and manage a world-class data lakehouse in minutes on Apache Hudi, Apache Iceberg, and Delta Lake. Delivered as a fully managed cloud service in your private cloud environment, Onehouse offers high-performance ingestion pipelines for minute-level freshness and optimizes tables for maximum query performance. Thanks to its truly open data architecture, Onehouse eliminates data format, table format, compute, and catalog lock-in, guarantees interoperability with virtually any warehouse or data processing engine, and ensures exceptional ELT and query performance for all your workloads.

Companies worldwide rely on Onehouse to power their analytics, reporting, data science, machine learning, and GenAI use cases from a single, unified source of data. Built on Apache Hudi and Apache XTable (Incubating), Onehouse features advanced capabilities such as indexing, ACID transactions, and time travel, ensuring consistent data across all downstream query engines and tools. The platform's unique incremental processing capabilities deliver unmatched ELT cost and performance by minimizing data movement and optimizing resource usage. With 24/7 reliability, immediate cost savings, and open access for all major tools and query engines, benefit from Onehouse's #nolockin philosophy to future-proof any stack. To learn more, visit https://www.onehouse.ai.

Contact Information

Forrest Carman

forrestc@owenmedia.com

(206) 859-3118

SOURCE: Onehouse

View the original press release on newswire.com.